Introduction:

Our first project of Studio 3 is to make a VR game about mindfulness that is compatible with two platforms. The two platforms we have been given to work with are the Oculus Rift and the Oculus Go.

Two of the game pitches were chosen by the group, and the person behind each pitch became the producer for that game. The rest of us are contracted out by the producers to work on each project.

The two projects are:

- Candy sorting: A game about combining candies and feeding them to a little pet.

- Campfire: A game about enjoying the night wilderness alone.

We have just 4 weeks to finish these projects, so needless to say things are going to be busy.

As I will be bouncing between projects depending on the needs of the producers, instead of making separate blogs for each, I’m just going to talk about what I did each week for each.

Week 1:

This will be the format for the later weeks, but there is not much to say right now: our week started on Tuesday and the sprint ended on Thursday. We didn’t get much time to do things, as most of the week was taken up by pitches.

Candy:

VR Setup:

Set up VR in the project so it is ready for the designers.

Candy colour merging:

This weekend I will be researching the best way of doing this. It might involve some shader work, but I’m not entirely sure yet.

Campfire:

VR Setup:

Set up VR in the project so it is ready for the designers.

Week 2:

This week has been pretty eventful, with systems being designed and implemented. The designers have already added a large number of assets to the game as well. Here is my contribution to each project.

Candy:

Candy colour merging:

I knew we were going to have some issues here: the designers wanted a colour merging system similar to real life (subtractive), while computers use a different system (additive).

After some research into the subject I found a formula to get better looking colours after they had been combined (check out this video here). So that was reasonably simple, although it still didn’t solve the issue of additive light. I tried to find answers to this, but it seems intrinsically complicated to convert subtractive to additive. So what was the next best thing I could do? Fake it.

So I would check if the colour combination turned out to be white and convert those RGB input colours to HSV.

The colour combos for two colours that make white are:

Yellow + Blue, Yellow + Cyan, Yellow + Magenta, Magenta + Green, Magenta + Cyan, Red + Cyan.

So knowing the hue, I could then check whether the input colours fell within certain hue ranges and, if they did, spawn the candy as the colour it is supposed to be when mixing subtractively.
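As a rough sketch of that faking step (written in Python rather than the project's actual Unity scripts): the clamped additive sum, the hue band boundaries and the override table below are all assumptions for illustration, not the formula or values used in the game.

```python
import colorsys

# Upper hue bound (in degrees) for each named band; boundaries are assumptions.
HUE_BANDS = [(30, "red"), (90, "yellow"), (150, "green"),
             (210, "cyan"), (270, "blue"), (330, "magenta"), (360, "red")]

# Illustrative subtractive results for pairs whose additive sum is white.
SUBTRACTIVE_RESULT = {
    frozenset({"yellow", "blue"}): (0.0, 1.0, 0.0),     # green
    frozenset({"yellow", "cyan"}): (0.0, 1.0, 0.0),     # green
    frozenset({"yellow", "magenta"}): (1.0, 0.0, 0.0),  # red
    frozenset({"magenta", "cyan"}): (0.0, 0.0, 1.0),    # blue
}

def hue_name(rgb):
    """Convert RGB (0..1 floats) to HSV and name the hue band it falls in."""
    hue, _sat, _val = colorsys.rgb_to_hsv(*rgb)
    degrees = hue * 360.0
    for upper, name in HUE_BANDS:
        if degrees < upper:
            return name
    return "red"

def additive_mix(a, b):
    """Plain additive mix: add each channel and clamp to 1."""
    return tuple(min(1.0, x + y) for x, y in zip(a, b))

def merge(a, b):
    """Mix additively, but swap in a subtractive-looking colour for white."""
    mixed = additive_mix(a, b)
    if all(channel >= 0.99 for channel in mixed):
        pair = frozenset({hue_name(a), hue_name(b)})
        return SUBTRACTIVE_RESULT.get(pair, mixed)
    return mixed
```

Pairs that are not in the table simply keep their additive result, so the table only needs entries for the combinations that look wrong.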

Candy Machines are interactive:

The designers had attempted to create interactive buttons for VR but had just used a mouse click instead. It got me thinking: this is VR, so why don’t we make things a little more interactive?

The machines already had ‘buttons’ (by that I mean a cube). So I hollowed out a space in the machine behind the button and placed a collider there, with a spring joint connecting the back plate and the button.

This way you have a physical button that you can push, which might prove to be a little more satisfying.
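In the game the physics engine's spring joint does all of this, but the behaviour can be sketched by hand. The following is a minimal one-dimensional damped spring in Python, assuming a unit-mass button cap; the class name, constants and the press/release thresholds are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class SpringButton:
    stiffness: float = 200.0      # spring force pulling the cap back to rest
    damping: float = 20.0         # resists motion so the cap settles
    travel: float = 0.03          # how far the cap can move into the machine
    press_depth: float = 0.02     # depth at which the button counts as pressed
    release_depth: float = 0.01   # depth at which it counts as released again
    depth: float = 0.0
    velocity: float = 0.0
    pressed: bool = False

    def step(self, push_force, dt):
        """Advance one physics step; return True on the step the press starts."""
        accel = push_force - self.stiffness * self.depth - self.damping * self.velocity
        self.velocity += accel * dt
        self.depth += self.velocity * dt
        # The back plate and the front of the machine limit the travel.
        if self.depth < 0.0 or self.depth > self.travel:
            self.depth = min(max(self.depth, 0.0), self.travel)
            self.velocity = 0.0
        was_pressed = self.pressed
        if not self.pressed and self.depth >= self.press_depth:
            self.pressed = True
        elif self.pressed and self.depth <= self.release_depth:
            self.pressed = False
        return self.pressed and not was_pressed
```

Using two thresholds instead of one stops the button from firing repeatedly when the cap hovers around the press depth.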

Campfire:

Interactives:

There were a few things needed here:

- A simple raycast from the left controller to identify and control objects

- A state system for the stick

  - The state system just identified:

    - whether it was being used as a vessel for a marshmallow to be roasted (instantiated at an anchor point)

    - whether it was being used as a sparkler to write in the air (activating a trail renderer)

Shaders:

Currently this needs two shaders:

- Fire

- Sparks left behind by the trail renderer

My goal for next week is to hopefully have one of these finished and implemented into the project. However, right now I still need to do some research.

Week 3:

The two projects are in very different states this week, largely due to the scale and scope of each.

Both should now be at the point where everything is in the game and we are polishing and fixing bugs.

However, this is not the case for both games.

Campfire is very nearly complete, while Candy still has a lot of work left and things that need to be fixed.

My workload on each reflects the amount that needs to be fixed or linked up in that project.

Candy:

I overhauled the interactive system this week for three reasons: one, raycasting to things was a constant, expensive operation; two, we could easily achieve the same results using a collider system; and three, the designers didn’t want a character movement system.

Interactives Update:

Grabber:

The original intent was to pick up objects with your hands on the Rift and to use a claw grabber on the Go. However, without the correct scaling on the Rift, or without movement, grabbing objects with your hands is impossible at some distances. Because of this we are currently just using the mechanical grabber.

Controls:

Because the stick has to cover a variety of distances (close, far and everything in between), it can be extended or shrunk with the Go’s click and back buttons.

Because this input is read as a string through the XR system, it didn’t translate well to the other device (see Device detection).

Picking up/ putting down:

This was originally handled by parenting the candy object to the stick, turning off gravity and making it kinematic. However, this ran into some issues: the candy would inherit the local transform of the stick. Easy to fix, I thought: I would just modify the local transform when it’s picked up so the scaling is correct. Sure enough, this worked when run in Unity, but on the device it didn’t (what the hell?).

So, after spending a decent amount of time debugging this issue, I decided to take a different approach: instead of parenting, I just set the candy’s transform position to the grabber’s.

Buttons:

This was never specified, so I just made an object with a collider on it that detects the grabber. Simple, and it does the job.

Device detection:

Due to the input differences when using the XR input system, I implemented a function on Awake that checks the device name to see whether that string contains either “Rift” or “Go”. From there it modifies a set of strings, which are then used for the input instead.
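The idea can be sketched like this in Python (not the project's Unity code). The input names in the table are made up for illustration; only the “Rift” and “Go” substring checks come from the description above.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class InputNames:
    extend: str   # input string used to extend the stick
    shrink: str   # input string used to shrink the stick

# Hypothetical input strings per device; the real names live in the project's
# input settings and are not given in this post.
BINDINGS = {
    "Rift": InputNames(extend="RiftExtend", shrink="RiftShrink"),
    "Go": InputNames(extend="GoClick", shrink="GoBack"),
}

def detect_bindings(device_name):
    """Pick the input strings by looking for a keyword in the device name."""
    for keyword, names in BINDINGS.items():
        if keyword in device_name:
            return names
    raise ValueError(f"Unsupported device: {device_name!r}")
```

The rest of the input code then only reads `names.extend` and `names.shrink`, so it never needs to know which headset is attached.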

Campfire:

Much like with Candy, I overhauled the interactive system this week as well.

Interactives Update:

Stick:

The stick no longer raycasts; instead it just checks trigger colliders and strings, then changes the current state of the stick to match.

While this is not a state machine, it makes the state clearer than a set of bools would.

The stick can only be in one state at a time (i.e. marshmallow or fire writing).

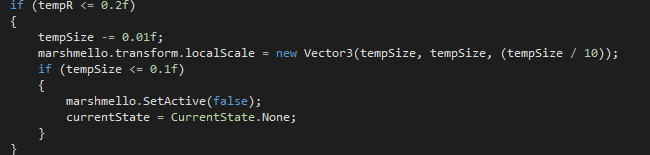
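For readers without the screenshot, here is a minimal Python sketch of the same idea: one enum value instead of several bools, switched by the string on whatever trigger the stick enters. The tag strings and the idle state are assumptions for illustration.

```python
from enum import Enum, auto

class StickState(Enum):
    IDLE = auto()           # assumed default state, not mentioned in the post
    MARSHMALLOW = auto()
    FIRE_WRITING = auto()

# Hypothetical trigger strings mapped to the state they switch the stick into.
TAG_TO_STATE = {
    "MarshmallowBag": StickState.MARSHMALLOW,
    "Fire": StickState.FIRE_WRITING,
}

class Stick:
    def __init__(self):
        self.state = StickState.IDLE

    def on_trigger_enter(self, tag):
        """Switch state if the trigger's string is known, else keep the state."""
        self.state = TAG_TO_STATE.get(tag, self.state)
        return self.state
```

Because there is only one `state` field, the stick cannot end up roasting a marshmallow and fire writing at the same time.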

Marshmallow:

Activation:

Previously an object was being instantiated to the tip of the stick, now instead we are just turning on and off a model.

Realism:

While the marshmallow is in the fire, it slowly chars. Once it’s fully charred, it shrinks until it eventually disappears.

Then all we have to do is reset the changes to the marshmallow when we activate it again.
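A small Python sketch of that char, shrink and reset cycle, assuming simple linear rates (the rate values and field names are made up, and the real version drives a model and material rather than plain numbers):

```python
from dataclasses import dataclass

@dataclass
class Marshmallow:
    char_rate: float = 0.2     # char gained per second in the fire (assumed)
    shrink_rate: float = 0.5   # scale lost per second once charred (assumed)
    char: float = 0.0          # 0 = fresh, 1 = fully charred
    scale: float = 1.0
    active: bool = False

    def activate(self):
        """Turn the model back on and undo any charring or shrinking."""
        self.char = 0.0
        self.scale = 1.0
        self.active = True

    def update(self, in_fire, dt):
        if not self.active or not in_fire:
            return
        if self.char < 1.0:
            self.char = min(1.0, self.char + self.char_rate * dt)
        else:
            self.scale = max(0.0, self.scale - self.shrink_rate * dt)
            if self.scale == 0.0:
                self.active = False   # fully burnt away, hide the model
```

Resetting inside `activate` means the same object can be reused every time, which fits with toggling a model on and off instead of instantiating a new one.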

Sky:

This went through two iterations based on the designers’ wants.

Previous set up:

The previous set up just checked the position of the stick against the transform.x of the player, and also checked the head rotation to decide whether it should flip those values (i.e. the player was looking behind them while trying to control the sky).

However, this set up meant that the player could just click and hold to rotate the sky, which I can say from testing definitely induced motion sickness.

Current set up:

The current set up checks the transform position against its last recorded position, which is recorded after pressing the trigger.

This gave the appropriate results, but it would constantly update and pick up the smallest movements of your hand, so I added an offset so that it wasn’t too sensitive.
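Roughly, the current set up behaves like the Python sketch below. The dead zone size, the degrees-per-unit factor and the choice to re-record the position after every applied movement are assumptions, not values taken from the project.

```python
class SkyRotator:
    def __init__(self, dead_zone=0.01, degrees_per_unit=90.0):
        self.dead_zone = dead_zone                # ignore movement smaller than this
        self.degrees_per_unit = degrees_per_unit  # rotation per unit of hand travel
        self.last_x = None                        # None while the trigger is up
        self.angle = 0.0

    def on_trigger_pressed(self, hand_x):
        self.last_x = hand_x

    def on_trigger_released(self):
        self.last_x = None

    def update(self, hand_x):
        """Rotate the sky by how far the hand moved since the last record."""
        if self.last_x is None:
            return self.angle
        delta = hand_x - self.last_x
        if abs(delta) < self.dead_zone:
            return self.angle                     # hand jitter, do nothing
        self.angle = (self.angle + delta * self.degrees_per_unit) % 360.0
        self.last_x = hand_x
        return self.angle
```

Since the rotation comes from movement rather than from holding the trigger, the sky stops as soon as the hand stops, which avoids the click-and-hold spinning of the previous set up.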

Doing this seemed to drop the frame rate a decent amount, so I started debugging and stripped back the code to find the issue. It turns out that the SetFloat call is the one using a large amount of memory.

My plan is to show the designer tomorrow and get their opinion on how bad it is. If worst comes to worst, we will need to look for an alternative to rotating the texture.

Week 4:

This was the final week for the in the moment projects, and there was a lot that needed integrating, fixing and testing before the intended release date on Friday. I worked particularly hard to make sure this was a success.

Candy:

UI:

Interactive elements in UI work quite differently depending on the software you are working with, so it took some research into the OVR systems to figure out how to do this (the OVR API for Unity is something like 3 versions behind as well, which didn’t help).

It ended up being reasonably simple:

- Change the canvas to world space

- Add the OVR Raycaster component to the canvas

We had issues getting this to work on the Rift, but it worked perfectly on the Go. This was due to the way the OVR input handled its input commands.

We were using the XR strings in the GetAxis function, and these were being passed through a GetButtonUp call in the OVR input model, which was erroring out. However, the gaze pointer had an exception for the Go and Unity that used a virtual mouse position instead (which is why it still worked there), while the Rift did not.

We fixed this by holding the last axis value and the current axis value, and comparing them to replicate a button up for an axis instead.
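The axis comparison can be sketched in a few lines of Python. The 0.5 threshold and the class name are assumptions; the point is only that "button up" is the frame where the axis drops from held to not held.

```python
class AxisButton:
    def __init__(self, threshold=0.5):
        self.threshold = threshold   # axis value that counts as "held" (assumed)
        self.previous = 0.0          # axis value seen on the previous frame

    def update(self, current):
        """Call once per frame; True only on the frame the axis is released."""
        was_down = self.previous >= self.threshold
        is_down = current >= self.threshold
        self.previous = current
        return was_down and not is_down
```

For example, feeding it the per-frame values 0.0, 0.9, 1.0, 0.2, 0.0 reports a release only on the fourth frame.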

The designers had put this in another scene, but I integrated it into one of the models in the game and had another camera rig that dealt with the menu only, which then swapped out to the actual game rig. This came out looking way better.

Stable bunny:

Oh boy, the bunny. The bunny was meant to be an interactive character that jumped around on the desk and chased the candies. But the programming for this was prone to many errors, which either looked terrible or just straight up crashed the game.

The crashes were due to the bunny looking up GameObjects in a list while also deleting things from that list in the middle of the functions that were using it.
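This is a common trap in most languages. One safe pattern, shown here as a small Python sketch with a hypothetical helper name, is to work out what needs removing first and only then rebuild the list, so nothing is deleted while the list is still being walked.

```python
def remove_eaten(candies, is_eaten):
    """Remove eaten candies from the list in place and return them.

    The list is never modified while it is being iterated: both passes read
    the original contents, and the list is replaced in one step at the end.
    """
    eaten = [candy for candy in candies if is_eaten(candy)]
    candies[:] = [candy for candy in candies if not is_eaten(candy)]
    return eaten
```

In Python, removing items inside the loop would silently skip elements rather than crash, but the fix is the same either way.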

The things that looked terrible came from the bunny heading towards a position and snapping to the exact location (i.e. it would get attached to candies and their rotation).

So, with a week to solve this, I initially looked into fixing the existing code by adding exceptions to try to stop it erroring out. However, after an hour I found I had made no progress, so I scrapped the current version and made a new “stable bunny”.

The movement was removed from the bunny and it was split into three functions.

- Deciding what candy colour it liked

For this I just gave it a high and a low hue value to check against.

- Detecting the candy

This was just an OnTriggerEnter on a trigger in front of and on the bunny, which took the candy’s colour, converted it to HSV and then passed the H value into another function.

I removed the physics from the object it was eating so it wouldn’t roll around and ultimately fall onto the floor, which would delete it.

- Detecting the colour

This just took the value of the colour and played the appropriate animations depending on whether the bunny liked that colour. I timed this myself with a coroutine (I find this easier than working with the Unity animator).

This resulted in a functional, non-moving bunny that caused no issues.
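The colour check at the heart of those three functions can be sketched in Python as below. Hues are on a 0 to 1 scale here, the animation names are placeholders, and the wrap-around case for reds is an addition of mine rather than something the original script necessarily handled.

```python
import colorsys

def likes_colour(rgb, hue_low, hue_high):
    """Return True if the colour's hue lies between the low and high values."""
    hue, _sat, _val = colorsys.rgb_to_hsv(*rgb)
    if hue_low <= hue_high:
        return hue_low <= hue <= hue_high
    # A range such as 0.9 to 0.1 wraps through red at the ends of the scale.
    return hue >= hue_low or hue <= hue_high

def reaction(rgb, hue_low, hue_high):
    """Pick which (placeholder) animation to play for this candy."""
    return "happy" if likes_colour(rgb, hue_low, hue_high) else "disgusted"
```

A bunny that likes greens could use something like `reaction(candy_rgb, 0.25, 0.45)`.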

Grabber:

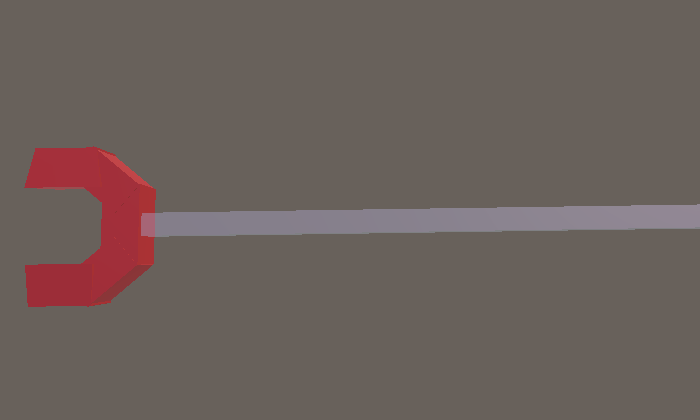

I asked if the designers could make a grabber for the game, as we were just using an extended cube previously. All they did was add a see-through texture to it, which wasn’t what I had in mind. So I created five cubes in Blender and made a claw, then made another model of them pinched together. I then toggled between these two models depending on whether the player had picked something up.

Audio:

Some of the audio had already been decided by one of the designers, and they had made an audio manager system for it as well. I just had to connect the actions up to our existing scripts. I added some changes as well, including a few different sounds and a change in pitch on objects like the glass beakers depending on their size.

Campfire:

Interactive Update:

While it was always intended to have different controls for the Rift and the Go, this wasn’t fully decided until the last week.

This meant the existing systems that I built (which were never made to be broken apart) needed to be split.

For this I first needed to know which device was being used, so I made a device detection script (see next section).

It then chooses the controls and activates the appropriate systems for that device.

I made a copy of the previous stick and removed the model so that the player had a controller identical to the stick. I then reused much of the stick’s functionality for the main controller on the Go, and split out the parts that were made for the stick only.

Previously the current state of the stick was tracked, but now that this was split between systems, I created an independent system that tracked the state for both of the new systems.

I also had to add new functionality: the marshmallow now spawns in the hand instead of on the stick and can then be placed on the stick, which means detecting whether the hand is touching the tip of the stick.

Device Detection:

Because we needed two separate control systems for the devices, the system had to be able to distinguish between them. For this I created a device detection script which reads the current device name and checks that string to see whether it contains the word “Rift” or “Go”, then adjusts the position of the camera and the control systems for that device.

UI:

I hooked up the UI and created a scrolling script for the credits. Much like with Candy, I moved it from a separate scene to the main scene and then just added a way to distinguish whether the player was using the UI or the game.

Final Thoughts:

I’m very happy with how both of these projects turned out. I put a lot of hard work and effort into making them work properly on both devices and into making them a better experience.

However, I wish there had been more time with both devices for everyone. This would have made testing for the Rift much easier and would have cut down on the amount of solo work that I had to do to complete these projects.